
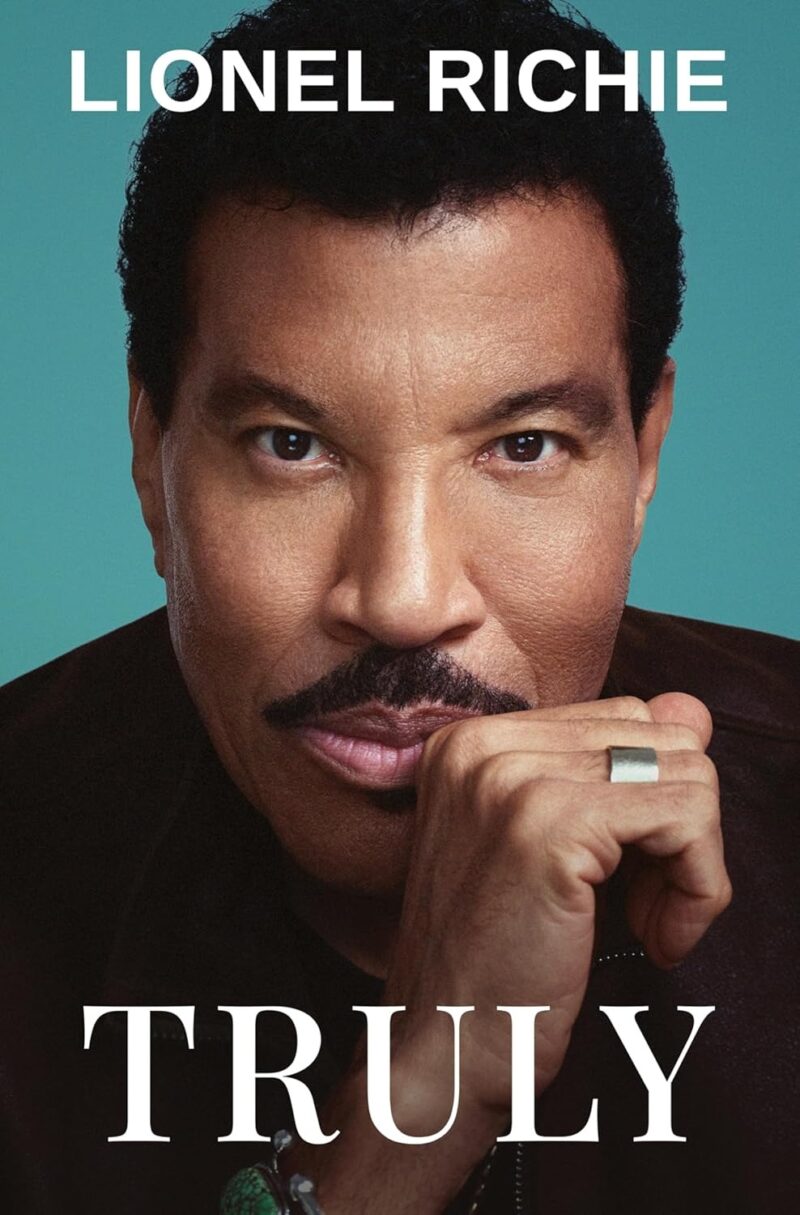

Stephen Witt’s The Thinking Machine lands in that rare zone where a business book feels like a reported narrative, technical briefing, and strategy lesson all at once.

You move through Jensen Huang’s world with enough color to enjoy the story and enough specificity to see how Nvidia turned into the defining infrastructure supplier of the AI wave.

The book focuses on people, engineering choices, internal pressure, and the deliberate stacking of decisions that made the company hard to compete with once the tide turned in its favor.

Readers often expect a tidy origin myth. Witt gives something different. You see a pattern of decisions repeated year after year, shaped by Huang’s intensity and the company’s instinct for long-cycle bets.

It is less about one breakthrough moment and more about years of building a foundation that would eventually hold enormous weight when the world’s computing needs changed.

The only real limitation is structural. Reviewers point out the narrative cuts off around mid-2024, so later market shocks and supply-chain swings land outside the book’s frame. That is not a dealbreaker, but it does mean you read it as an origin account rather than a full present-day analysis.

What follows is a review, then a set of strategy lessons rooted in Nvidia’s current scale and the company’s own filings and disclosures.


What the Book Is Really About

On the surface, you get a founder biography. In practice, you get a clear record of how Nvidia identified a curve in the computing world and placed itself where demand eventually concentrated.

The reporting aligns with The Guardian’s framing of Nvidia’s shift: Moore’s Law started slowing, and Huang made a decisive bet on a different computing model, reorganizing the company around parallelism.

The book shows how Nvidia built its advantage through reinforcing moves rather than one magical insight. A few patterns jump out because they repeat across the company’s decisions.

You can see echoes of disciplined board oversight in Nvidia’s approach, something that aligns closely with the leadership frameworks outlined in the Non-Executive Knowledge Centre.

Reinforcing Choice 1: A Computing Model Ready for New Workloads

Parallelism was not a marketing idea. It was a real architectural pivot aimed at workloads that were only beginning to show up in the mid-2000s.

Training early versions of neural networks, running physics simulations, handling graphics workloads that needed scale: all of it mapped naturally onto a parallel engine. Nvidia leaned into that engine before it was fashionable.

Reinforcing Choice 2: A Developer Platform That Made the Model Usable

CUDA is the anchor here. Nvidia introduced CUDA in November 2006 as a general-purpose parallel computing platform and programming model. That sounds basic today.

At the time, it was closer to a bet on developer behavior, education, and toolchain maturity. CUDA was not just a way to program GPUs; it was a way to turn a piece of silicon into a full workflow.

CUDA-X libraries, documentation, SDKs, and domain-specific frameworks completed the picture. Developers learned one abstraction, not ten.
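To make “general-purpose parallel computing platform and programming model” a little more concrete: in CUDA C/C++, a programmer writes a kernel that describes the work for a single element, and each GPU thread works out which element is its own from the built-in variables `blockIdx`, `blockDim`, and `threadIdx`. The sketch below is a serial Python emulation of that indexing idea, not CUDA code. The `launch` helper is hypothetical and stands in for CUDA’s `kernel<<<grid, block>>>(args)` launch syntax, and SAXPY (y = a·x + y) is simply a classic illustrative workload.

```python
# A serial, pure-Python emulation of CUDA's data-parallel launch model.
# Real CUDA runs the (block, thread) pairs concurrently on the GPU; here we
# loop over them one by one purely to show how the indexing works.

def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """Kernel body: each emulated 'thread' handles exactly one element."""
    i = block_idx * block_dim + thread_idx  # global index, as computed in CUDA C
    if i < n:  # guard: the grid may cover more indices than there is data
        y[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Hypothetical helper mimicking kernel<<<grid_dim, block_dim>>>(args)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks
launch(saxpy_kernel, grid_dim, block_dim, n, 2.0, x, y)
print(y)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

On a real GPU the loops inside `launch` disappear: the hardware runs the threads concurrently, which is why the kernel is written for one element and guarded with `if i < n`.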

Reinforcing Choice 3: A Packaging Strategy That Removed Friction for Enterprise Buyers

Witt’s reporting hints at the cultural force behind this move. Huang was relentless about building systems rather than isolated chips. The company slowly moved from selling components to selling outcomes.

DGX systems, networking, reference architectures, and enterprise software all pointed customers toward a single decision: buy the full stack because it works out of the box.

Reinforcing Choice 4: Cadence That Turned Into Customer Confidence

Nvidia names its architectures, publishes roadmaps, and treats each generation as a repeatable event. It helps buyers plan.

It also creates rhythm inside the company. Ship the next architecture. Ship the next software layers. Ship the next developer tools. Keep the loop going.

Reinforcing Choice 5: A Culture Built for Speed, Accuracy, and Technical Pressure

Witt portrays a culture driven by precision and intensity. Business Insider’s reporting around the book highlights public critique, directness, and a style that can push people hard. It can also produce momentum when the workload demands clarity and courage.

Put together, the story is not about charisma or luck. It is about a company that learned to build second-order benefits into its products and processes.

Nvidia’s Core Strategic Move to Turn Hardware Into a Platform

A small line in Nvidia’s fiscal 2025 annual filing captures how the company sees itself. Nvidia calls its ecosystem an accelerated computing platform. GPUs form the foundation. DPUs and CPUs extend it.

Software makes it full-stack. CUDA plus CUDA-X libraries sit at the center, with paid software suites like NVIDIA AI Enterprise and managed services like DGX Cloud on top.

That phrasing is not window dressing. It reflects the strategic spine of the company.

The Platform Playbook

A few moves define how Nvidia operationalized that identity.

- Own the abstraction developers depend on

- Lower the friction until using your stack feels natural

- Push integration upward so the buyer adopts systems, not parts

- Keep cadence tight so no one wonders when the next leap is coming

CUDA is the clearest example of that playbook. It took years of investment in training materials, optimization libraries, community support, and documentation. During those years, the market for deep learning was still forming.

When the spike hit, Nvidia had something competitors could not replicate quickly: an ecosystem with institutional memory.

Platform Is a Product Category, Not a Slogan

Companies often call themselves platforms. Very few operate like one. Nvidia behaves as if platform-building is the real product.

Key Signals

- Elevated documentation quality

- Libraries tuned to real workloads

- Migration paths for developers who need stability

- Procurement-ready enterprise packaging

Startups tend to think “platform” means broad surface area. Nvidia shows a different version: platform means deep control over the first abstraction your user touches.

When customers build inside your abstraction, you get retention that does not break when competitors match features. When they simply “use” your product, switching becomes a spreadsheet exercise.

The Full-Stack Move

One of the book’s strongest insights is how early Nvidia realized it needed to build systems, not isolated accelerators. The company’s own disclosures show how far that shift has gone.

The Blackwell architecture is described as a data center-scale infrastructure spanning GPUs, CPUs, DPUs, interconnect, switch chips, systems, and networking adapters. Not a chip. A system.

A bundle of decisions cooked into something buyers can deploy without running a scavenger hunt across vendors.

Large buyers need reliability, speed, and an easy handoff from planning to deployment. Nvidia fills the awkward integration layer that slows adoption. That is where its scale snowballs.

Scale Lesson: Reduce Customer Integration Risk

A company scales faster when customers can adopt without reinventing the wheel. Nvidia repeatedly invests in the pieces that make adoption smooth.

Examples:

- Hardware plus networking plus system-level design

- Enterprise software bundles with support

- Cloud-managed offerings for customers who want speed

- Prescriptive benchmarks instead of vague claims

For an early-stage founder, the lesson is not “build everything.” It is to own the ugly middle: the part customers hate dealing with. Package that pain into something clean.

A Quick Numbers Snapshot to Anchor the Discussion

Here is a table showing how the book’s long build-up maps to the current observable scale.

| Metric | What it signals |
| --- | --- |
| Q3 FY2026 revenue: $57.0B | Demand at infrastructure scale |
| Q3 FY2026 Data Center revenue: $51.2B | Data center is the engine |
| FY2025 repurchases: $34.0B | Cash generation supporting massive capital return |
| FY2025 Data Center revenue up 142 percent YoY | Demand acceleration |
| First company to surpass $5T valuation (Oct 2025) | Market belief in durability |
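Two of the “what it signals” entries rest on quick arithmetic. A minimal Python sketch, using only the figures quoted in the table, makes them explicit: Data Center’s share of Q3 FY2026 revenue, and what a 142 percent year-over-year increase means as a multiple. Variable names are illustrative; this is arithmetic on the quoted figures, not a model of Nvidia’s financials.

```python
# Figures as quoted in the snapshot table (US$ billions); illustrative arithmetic only.
q3_fy2026_revenue = 57.0
q3_fy2026_data_center = 51.2

# Share of quarterly revenue coming from the Data Center segment
dc_share = q3_fy2026_data_center / q3_fy2026_revenue
print(f"Data Center share of Q3 FY2026 revenue: {dc_share:.1%}")  # 89.8%

# "Up 142 percent YoY" means the new figure is 1 + 1.42 = 2.42x the prior year's
growth_pct = 142
multiple = 1 + growth_pct / 100
print(f"FY2025 Data Center revenue vs FY2024: {multiple:.2f}x")  # 2.42x
```

Roughly nine of every ten revenue dollars in that quarter came from Data Center, which is what “data center is the engine” means in practice.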

What Nvidia’s Story Teaches About Strategy

Below are the strategy insights you can extract from the book’s reporting and Nvidia’s own disclosures.

1. Build for the Workload That Is Coming, Then Hold Your Nerve

Huang’s bet on parallelism came before the world fully needed it. Most firms struggle to invest that far ahead because:

- Revenue attribution looks weak in early years

- Tooling support feels expensive

- Leadership often abandons long bets after one miss

Nvidia survived by building core primitives and then treating developer adoption as a multi-year movement.

2. Treat Developers as a Compounding Distribution Channel

CUDA changed how developers wrote code. That alone built loyalty. Nvidia’s own documentation archive shows CUDA launched in November 2006 as a general-purpose model, and the company steadily extended it into a full software body with CUDA-X and domain frameworks.

A developer platform compounds when developers build inside it rather than simply using it.

3. Move up the Stack When Customer Friction Demands It

Nvidia expanded from GPUs into DPUs, CPUs, storage paths, networking, and entire systems. The pattern is simple: move into the next layer once customers struggle to integrate it themselves. That is not ambition. That is customer-driven expansion.

4. Use Cadence to Create Buyer Confidence

Nvidia’s architecture names matter because they signal forward motion. Hyperscalers and enterprises plan multi-year deployment cycles around roadmaps, and Nvidia publishes enough detail for those teams to commit.

What Nvidia’s Story Teaches About Scaling Operations

The book focuses on culture and decisions. Current filings reveal the weight of the machine that grew from those decisions.

1. Scale Is Supply Chain Plus Credibility

At Nvidia’s current scale, success depends on manufacturing allocation, packaging capacity, networking component supply, memory availability, and global delivery. The flywheel is operational, not rhetorical.

Nvidia’s Q3 FY2026 earnings release also highlights $37.0B in shareholder returns in the first nine months of the fiscal year, with $62.2B left under authorization. Few companies can fund operational expansion and massive capital return at the same time. That signals credibility in execution.

2. Culture That Ships Can Win, but Intensity Comes With a Cost

Witt’s portrait shows a company driven by precision and pressure. Business Insider’s reporting highlights sharp critique, direct communication, and moments where Huang rejects speculative futurism in favor of engineering realism. Intensity helps speed. It also risks burnout, retention issues, and leadership bottlenecks.

3. Beware Narrative Cutoffs

Review roundups note the book ends in mid-2024. Markets shifted afterward: supply changed, AI demand grew, and Blackwell supply constraints and sellout conditions became real factors. The book remains accurate for its time frame, but supplement it with current primary sources when thinking about Nvidia’s present-day position.

Bottom Line

The Thinking Machine works because it captures Nvidia’s rise as a deliberate engineering and strategy project developed over decades. The company built parallel computing momentum, nurtured developer habits, expanded into systems, and created a roadmap cadence that enterprise buyers now depend on.

If you want today’s scale picture, Nvidia’s filings and earnings releases tell the story directly. Quarterly revenue of $57.0B, Data Center revenue of $51.2B, repurchases of $34.0B in fiscal 2025, and a valuation that crossed $5T in October 2025 show a company operating at a level few corporations have ever reached.

If you want lessons you can apply, the clearest ones are about compounding. Build the abstraction. Shape the workflow. Own the integration. Ship consistently. Set a cadence buyers can trust for years. That is the core of Nvidia’s strategy story, and Witt’s book captures how long it took to build the machine we see today.
